Gemma 2 Instruction Template for SillyTavern

**So what is SillyTavern?** Tavern is a user interface you can install on your computer (and Android phones) that lets you interact with text generation AIs and chat or roleplay with characters that you or the community create. SillyTavern is a fork of TavernAI 1.2.8 that is under more active development and has added many major features.

SillyTavern's interaction with the LLM is configured in the AI Response Formatting window (the 3rd button at the top). This is the main way to format your character card for text completion and instruct models. There are two major sections: the context template and instruct mode. Both have presets for popular model families, so make sure to grab both the context and instruct templates for your model. Changing a template resets your system prompt to default! Don't forget to save your template if you made any changes you don't want to lose. Read the documentation before bothering people with tech support questions.
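
Instruct mode boils down to wrapping each chat message in role-specific sequences before the whole chat is sent to the model as one block of text. The sketch below models that idea in Python. The `InstructTemplate` class and its field names are hypothetical and are not SillyTavern's preset format. The Gemma 2 sequences follow the model's turn format. The Mistral-style values are a simplified approximation included only for contrast.

```python
from dataclasses import dataclass


# Hypothetical model of an instruct template: each role gets a prefix and a
# suffix wrapped around the message text. These field names are illustrative
# and are NOT SillyTavern's actual preset keys.
@dataclass(frozen=True)
class InstructTemplate:
    user_prefix: str
    user_suffix: str
    model_prefix: str
    model_suffix: str

    def wrap(self, role: str, text: str) -> str:
        """Wrap one chat message in the sequences for its role."""
        if role == "user":
            return f"{self.user_prefix}{text}{self.user_suffix}"
        if role == "model":
            return f"{self.model_prefix}{text}{self.model_suffix}"
        raise ValueError(f"unknown role: {role}")

    def build(self, turns: list[tuple[str, str]]) -> str:
        """Wrap every turn, then open a model turn for the reply."""
        return "".join(self.wrap(role, text) for role, text in turns) + self.model_prefix


# Gemma 2 turn markers: roles "user" and "model", no system role.
GEMMA2 = InstructTemplate(
    user_prefix="<start_of_turn>user\n",
    user_suffix="<end_of_turn>\n",
    model_prefix="<start_of_turn>model\n",
    model_suffix="<end_of_turn>\n",
)

# Simplified Mistral-style [INST] wrapping for contrast (an approximation:
# spacing and BOS/EOS handling differ between Mistral releases).
MISTRAL = InstructTemplate(
    user_prefix="[INST] ",
    user_suffix=" [/INST]",
    model_prefix="",
    model_suffix="</s>",
)

chat = [("user", "Hi!"), ("model", "Hello."), ("user", "Tell me a story.")]
print(GEMMA2.build(chat))
print(MISTRAL.build(chat))
```

Swapping one template object for another changes only the wrapping, not the chat itself. This is why picking the wrong instruct preset for a model can quietly degrade output without raising any error.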

Gemma 2 is Google's latest iteration of open LLMs. Gemma is based on Google DeepMind's Gemini and has a context length of 8k tokens. The models are trained on a context length of 8192 tokens and generally outperform Llama 2 7B and Mistral 7B models on several benchmarks.

Instruction-tuned models expect their prompts in a specific chat format. The formatter has two purposes: indicating roles in a conversation, such as the system, user, or assistant roles, and delineating turns, especially in multi-turn conversations. Gemma 2 marks each turn with `<start_of_turn>` and `<end_of_turn>` and uses the role names `user` and `model`. It has no separate system role. If you load the model with Hugging Face Transformers, you can apply the tokenizer's chat template to a conversation (for example, a single user interaction) and it will produce this format for you.
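
The following minimal Python sketch renders a list of messages by hand so you can see what a correctly formatted Gemma 2 prompt looks like. It makes two assumptions. First, the `<bos>` token is written as literal text. Second, because Gemma 2 has no system role, a leading system message is folded into the first user turn; this mirrors a common workaround and is not part of the official template. The function name `format_gemma2` is hypothetical.

```python
def format_gemma2(messages, add_generation_prompt=True, bos="<bos>"):
    """Render {"role", "content"} dicts in the Gemma 2 turn format.

    Roles may be "system", "user", or "assistant". Gemma 2 has no system role,
    so (an assumption, mirroring a common workaround) a leading system message
    is prepended to the first user message.
    """
    msgs = [dict(m) for m in messages]  # copy so the caller's list is untouched
    if msgs and msgs[0]["role"] == "system":
        system = msgs.pop(0)["content"].strip()
        if not msgs or msgs[0]["role"] != "user":
            raise ValueError("a system message must be followed by a user message")
        msgs[0]["content"] = system + "\n\n" + msgs[0]["content"]

    parts = [bos]
    for i, msg in enumerate(msgs):
        role = "model" if msg["role"] == "assistant" else msg["role"]  # Gemma's name
        if role != ("user" if i % 2 == 0 else "model"):
            raise ValueError("roles must alternate user/model, starting with user")
        parts.append(f"<start_of_turn>{role}\n{msg['content'].strip()}<end_of_turn>\n")
    if add_generation_prompt:
        parts.append("<start_of_turn>model\n")  # the model writes its reply here
    return "".join(parts)


print(format_gemma2([
    {"role": "system", "content": "You are a helpful narrator."},
    {"role": "user", "content": "Describe the tavern."},
]))
```

With Hugging Face Transformers, calling `tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)` on the Gemma 2 tokenizer gives the reference string to compare against. Note that the official template rejects a `system` message instead of folding it.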

Is SillyTavern using the correct instruction style? A frequent question is where to find, or how to understand, which context template is better or should be used. Pick presets that match your model's family: a model such as MythoMax, for example, expects a different format than Gemma 2. In one forum thread about another model, a user wrote: "The official template to use for this model should be the Mistral instruction template, the one that uses [INST] and [/INST]. I've found the Mistral context and instruct templates in SillyTavern to work the best." That advice is specific to Mistral-style models and does not apply to Gemma 2. Another suggestion seen in the community is to change your instruction template in the SillyTavern settings to Roleplay.

The context template holds the story string, and the template supports Handlebars syntax. Never include examples in it, otherwise they will be sent twice.

Related changelog entries note four changes: a template for Gemma 2 was added, Gemma 2 and Llama 3.1 models were added to the list, a token counting endpoint is now supported, and sampling parameter presets were updated to include default values for all settings.
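
The story string in the context template is where Handlebars syntax shows up. The sketch below renders a tiny subset of it: non-nested `{{#if name}}...{{/if}}` blocks and `{{name}}` substitutions. This shows how empty character card fields drop out of the prompt. The renderer and the `STORY_STRING` are illustrative assumptions loosely modeled on SillyTavern's default context template. They are not its actual implementation.

```python
import re

# Only two constructs are handled: non-nested {{#if name}}...{{/if}} blocks and
# plain {{name}} substitutions. Real Handlebars supports much more.
IF_BLOCK = re.compile(r"\{\{#if (\w+)\}\}(.*?)\{\{/if\}\}", re.DOTALL)
VARIABLE = re.compile(r"\{\{(\w+)\}\}")


def render_story_string(template, fields):
    """Render a simplified story string from character card fields."""
    def keep_if(match):
        # Keep the block body only when the named field is non-empty.
        return match.group(2) if fields.get(match.group(1)) else ""

    text = IF_BLOCK.sub(keep_if, template)
    # Fill remaining {{name}} placeholders; unknown names render as "".
    return VARIABLE.sub(lambda m: fields.get(m.group(1), ""), text)


# Hypothetical story string, loosely modeled on SillyTavern's default context
# template; compare with the preset bundled with your SillyTavern version.
STORY_STRING = (
    "{{#if system}}{{system}}\n{{/if}}"
    "{{#if description}}{{description}}\n{{/if}}"
    "{{#if personality}}{{char}}'s personality: {{personality}}\n{{/if}}"
    "{{#if scenario}}Scenario: {{scenario}}\n{{/if}}"
)

print(render_story_string(STORY_STRING, {
    "system": "Stay in character.",
    "char": "Aria",
    "personality": "curious and witty",
}))
```

Because unset fields render as empty strings, a card without a scenario produces no `Scenario:` line at all, rather than an empty label.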

Sampling parameters matter as well: these settings control the sampling process when generating text using a language model. One community member uploaded some settings to try for Gemma 2 and observed that Gemma 2's censorship seems deeply embedded. The shared configuration is said to significantly reduce refusals, although warnings and disclaimers can still pop up.
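
Samplers run in the backend that generates the text, not in SillyTavern itself; SillyTavern sends the parameter values. As a generic illustration of what two common settings do, this Python sketch applies temperature scaling and then top-p (nucleus) filtering to a toy set of token scores. The token scores and the `sample_token` helper are hypothetical. Real backends implement many more samplers and may apply them in different orders.

```python
import math
import random


def sample_token(logits, temperature=1.0, top_p=1.0, rng=None):
    """Draw one token from {token: score} using temperature, then top-p."""
    if temperature <= 0:
        # Convention of this sketch: temperature 0 means greedy decoding.
        return max(logits, key=logits.get)
    rng = rng or random.Random()

    # Softmax over temperature-scaled scores (subtracting the max for stability).
    scaled = {tok: score / temperature for tok, score in logits.items()}
    peak = max(scaled.values())
    weights = {tok: math.exp(s - peak) for tok, s in scaled.items()}
    total = sum(weights.values())
    ranked = sorted(((w / total, tok) for tok, w in weights.items()), reverse=True)

    # Top-p: keep the smallest set of most likely tokens whose mass reaches top_p.
    kept, mass = [], 0.0
    for prob, tok in ranked:
        kept.append((prob, tok))
        mass += prob
        if mass >= top_p:
            break

    # Draw from the kept tokens in proportion to their probabilities.
    r = rng.random() * sum(prob for prob, _ in kept)
    for prob, tok in kept:
        r -= prob
        if r <= 0:
            return tok
    return kept[-1][1]  # guard against floating-point leftovers


logits = {"the": 3.0, "a": 1.0, "cat": 0.5}
# At temperature 0.7, "the" alone holds over 90% of the mass, so top_p=0.9
# keeps only that token and this prints: the
print(sample_token(logits, temperature=0.7, top_p=0.9, rng=random.Random(0)))
```

Lower temperature sharpens the distribution toward the top token. A lower `top_p` cuts off the unlikely tail before the draw. Treating a temperature of 0 as greedy decoding is a convention of this sketch.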

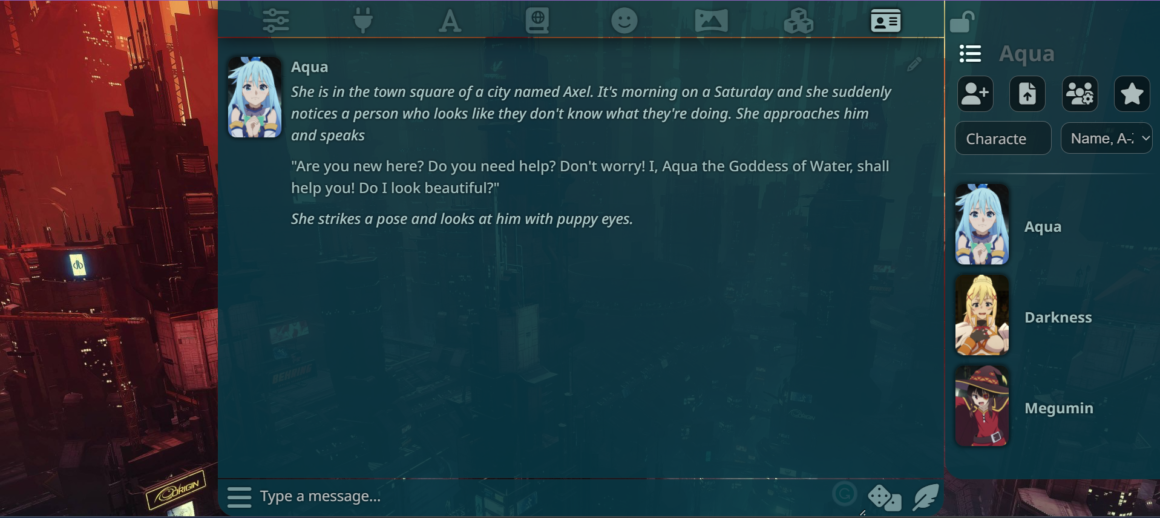

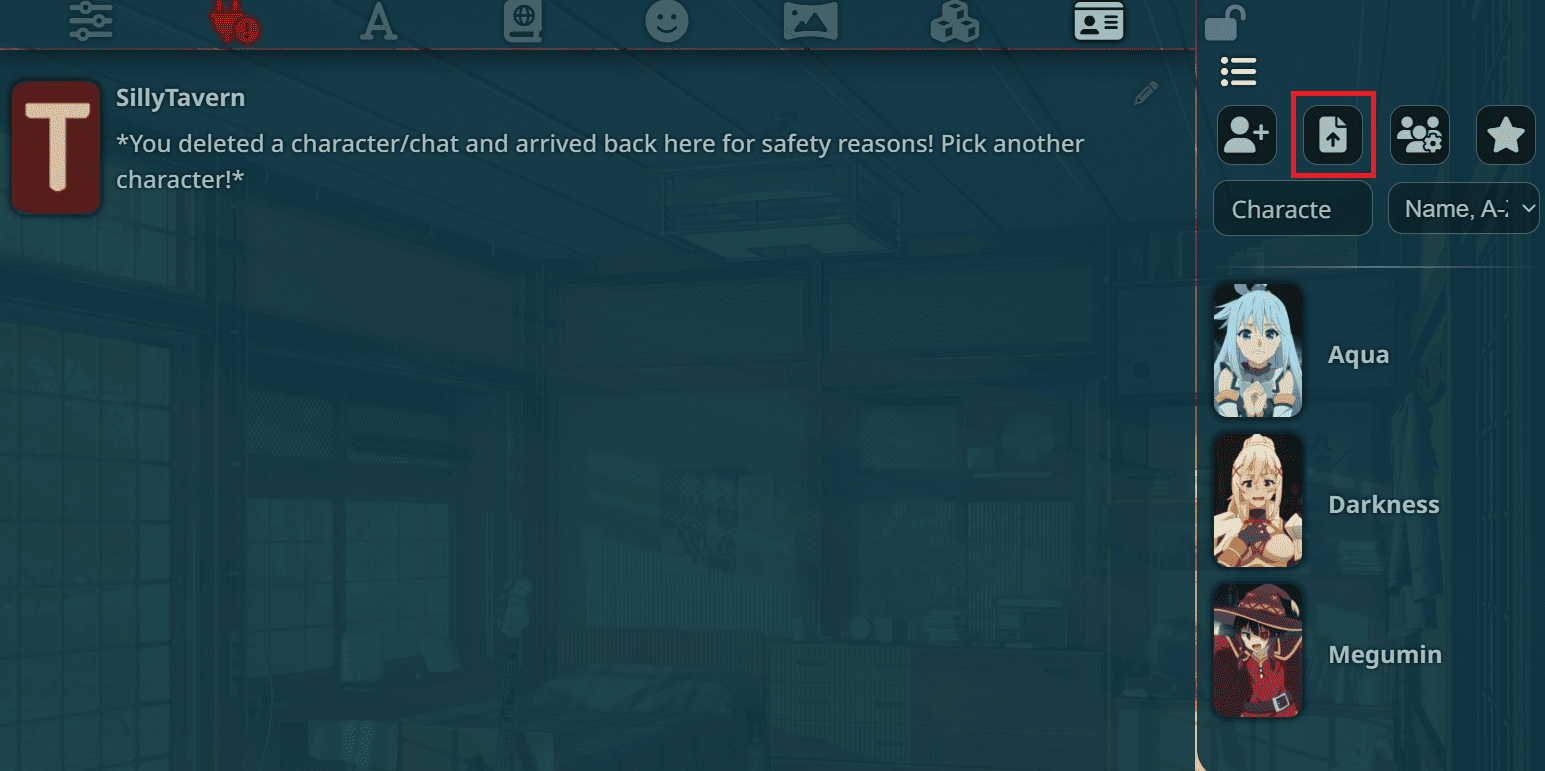

![Silly Tavern Overview & How to Get Started [2024]](https://approachableai.com/wp-content/uploads/2023/06/silly-tavern-example-1024x651.png)

![Silly Tavern Overview & How to Get Started [2024]](https://approachableai.com/wp-content/uploads/2023/06/silly-tavern-user-settings.png)