LangChain Prompt Template The Pipe In Variable

A prompt template consists of a string template. The prompt object is defined as `class PromptTemplate<RunInput, PartialVariableName>`, a schema to represent a basic prompt for an LLM. It includes methods for formatting prompts, extracting required input values, and handling partial prompts, and it carries a dictionary of its partial variables. A template such as `Tell me a {adjective} joke about {content}.` is similar to an ordinary format string, and the `PromptTemplate` class allows you to define a variable number of input variables for it. When you run the chain, you specify the values for those variables. Templates can also be composed: a separate class handles a sequence of prompts, each of which may require different input variables.
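The idea of a string template with named input variables can be sketched in plain Python. This is only an illustration using the standard library's `str.format` and `string.Formatter`; the helper name `input_variables` is hypothetical and the code does not use or reproduce LangChain's own classes.

```python
from string import Formatter


def input_variables(template: str) -> list[str]:
    """Return the placeholder names of a format-style template, in order of first use."""
    names = []
    # Formatter().parse yields (literal_text, field_name, format_spec, conversion).
    for _, field_name, _, _ in Formatter().parse(template):
        if field_name and field_name not in names:
            names.append(field_name)
    return names


# The example template from the text above.
template = "Tell me a {adjective} joke about {content}."

# Extract the required input values, then fill them in.
variables = input_variables(template)
prompt = template.format(adjective="funny", content="chickens")

print(variables)
print(prompt)
```

The values passed to `format` play the role of the values you specify when you run the chain.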
Prompt templates serve as structured guides for formulating queries to language models. A prompt template accepts a set of parameters from the user that can be used to generate a prompt for a language model. It can contain instructions to the language model, a set of few-shot examples to help the language model generate a better response, and a question to the language model. In Python, the Pydantic model `langchain.prompts.BasePromptTemplate` is the base class: every prompt should expose the `format` method, which returns a prompt. In TypeScript, a template is created with `new PromptTemplate<RunInput, PartialVariableName>(input)`. One of the use cases for prompt templates in LangChain is that you can pass a `PromptTemplate` as a parameter to an `LLMChain`; when you run the chain, you specify the values for the template. A pipeline prompt, which handles a sequence of prompts that may each require different input variables, consists of two main parts, one of which is the final prompt that is returned.
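As a rough illustration of the three ingredients listed above (instructions, few-shot examples, and a question), the following plain-Python sketch assembles them into one prompt string. The function name `build_prompt` and the `Q:`/`A:` layout are assumptions made for this example, not a LangChain convention.

```python
def build_prompt(instructions: str, examples: list[tuple[str, str]], question: str) -> str:
    """Assemble instructions, few-shot examples, and a question into one prompt."""
    # Hypothetical layout for a single example; any consistent layout would do.
    example_template = "Q: {question}\nA: {answer}"
    shots = "\n\n".join(
        example_template.format(question=q, answer=a) for q, a in examples
    )
    # The trailing "A:" leaves room for the model's answer.
    return f"{instructions}\n\n{shots}\n\nQ: {question}\nA:"


few_shot_prompt = build_prompt(
    instructions="Answer with the opposite word.",
    examples=[("hot", "cold"), ("tall", "short")],
    question="happy",
)
print(few_shot_prompt)
```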
`PromptTemplate` produces the final prompt that will be sent to the language model. You define its variables in the `input_variables` parameter of the `PromptTemplate` class (`List[str]`, required), which is a list of the names of the variables the prompt template expects. LangChain supports partial prompts in two ways, one of which is partial formatting with string values. LangChain also includes a class called `PipelinePromptTemplate`, a prompt template for composing multiple prompt templates together, which can be useful when you want to reuse parts of prompts. Its required field of type `List[Tuple[str, BasePromptTemplate]]` is a list of tuples, each consisting of a string (name) and a prompt template.
You can invoke a prompt template with prompt variables and retrieve the generated prompt as a string or a list of messages. In TypeScript, the class is imported with `import { PromptTemplate } from "langchain/prompts"` and instantiated with `new PromptTemplate<RunInput, PartialVariableName>(input)`, where the type parameter is declared as `RunInput extends InputValues = any`. A pipeline prompt takes a list of tuples, each consisting of a string name and a prompt template, together with the final prompt that is returned; this can be useful when you want to reuse parts of prompts.

A Guide to Prompt Templates in LangChain

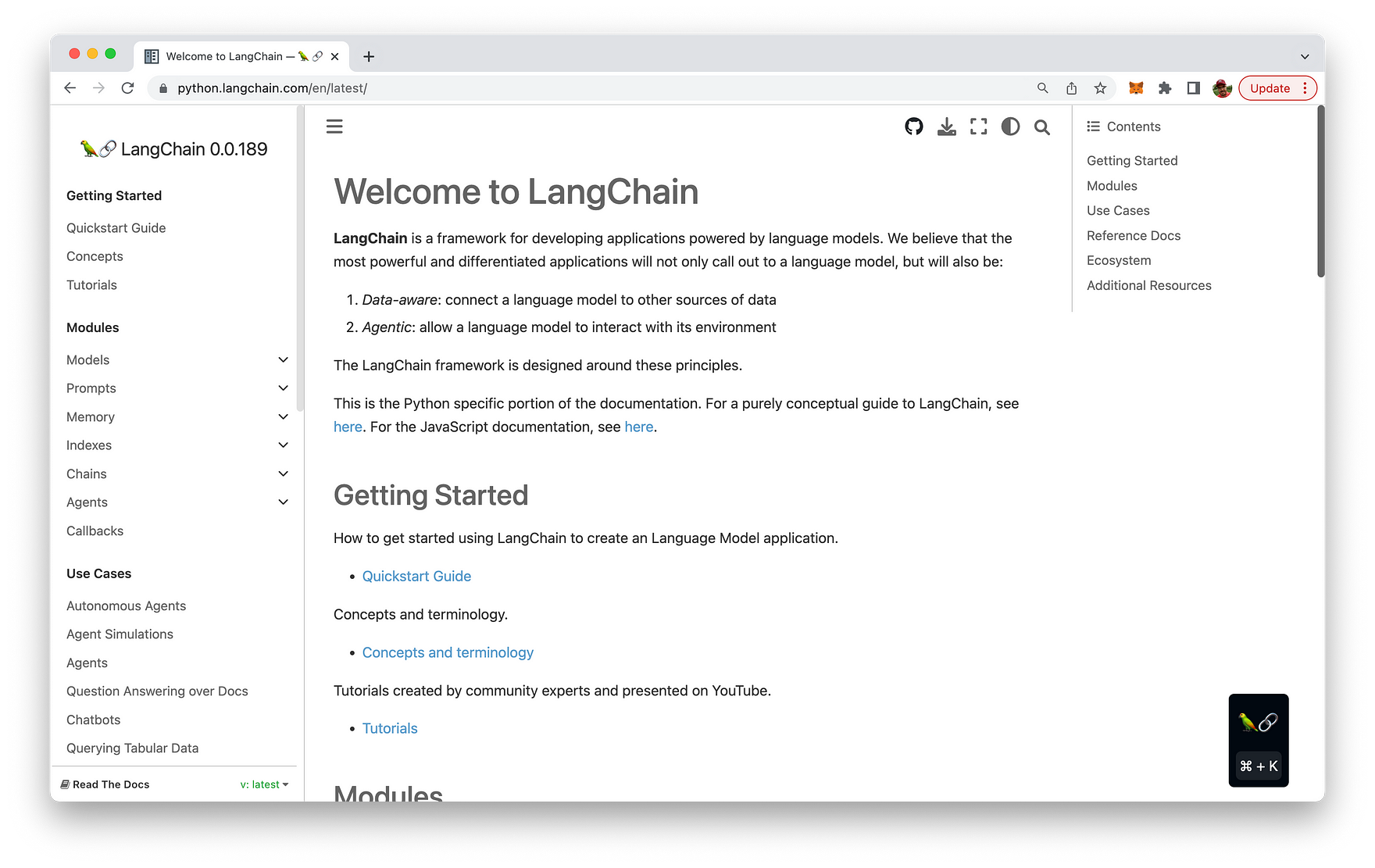

How to work with LangChain Python modules

Langchain Prompt Templates

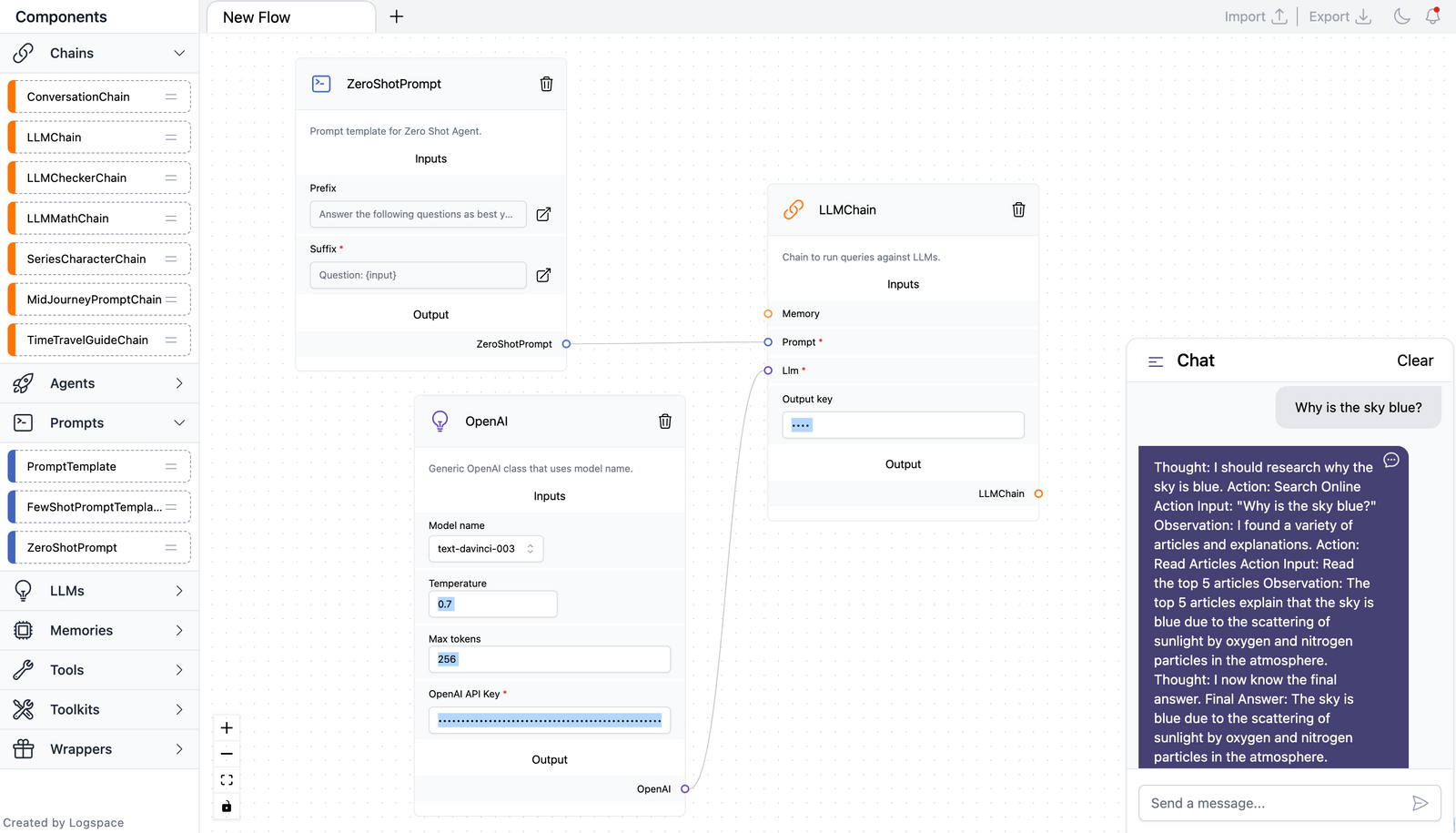

Prototype LangChain Flows Visually with LangFlow

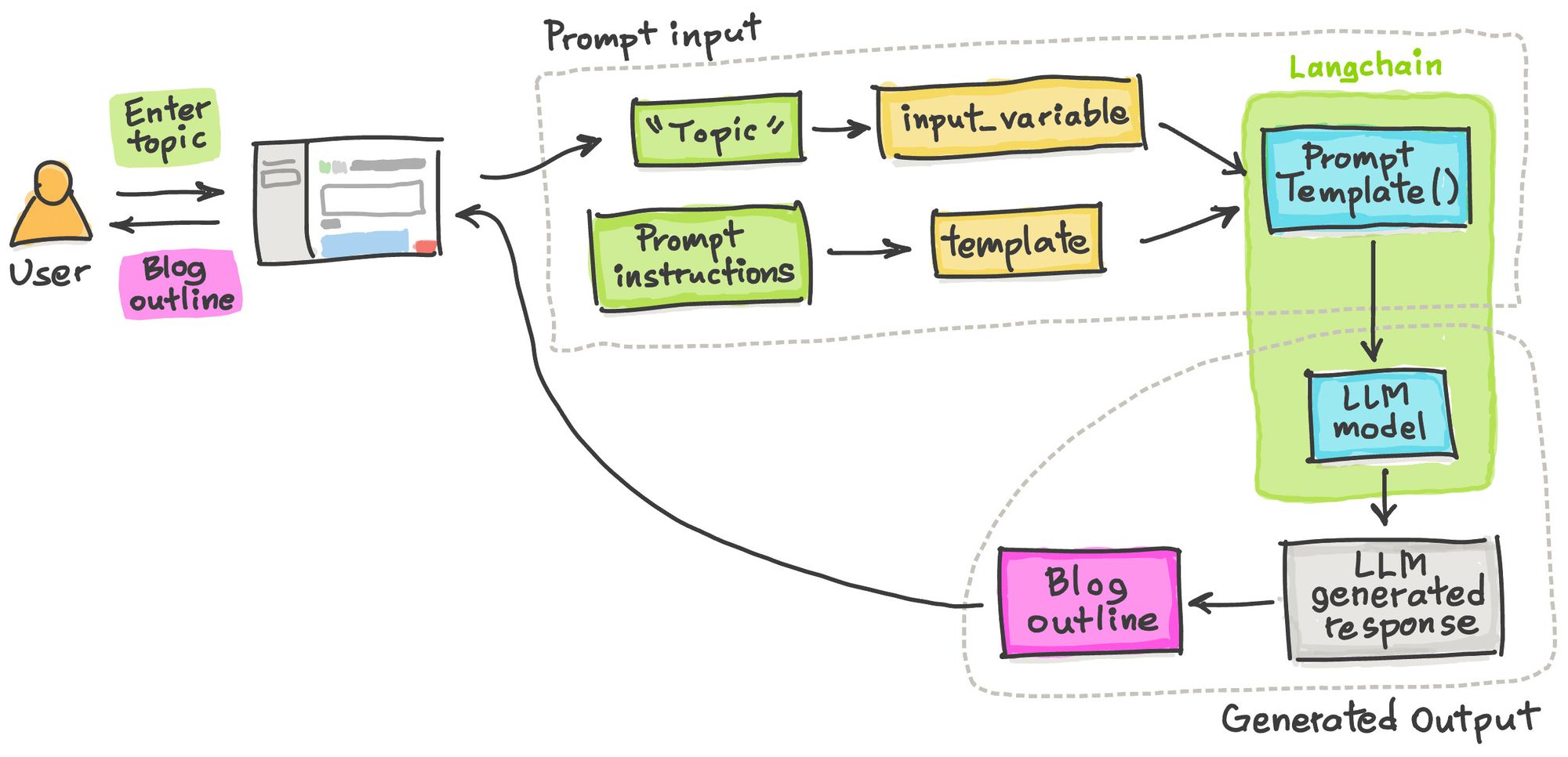

LangChain tutorial 2 Build a blog outline generator app in 25 lines

LangChain Nodejs Openai Typescript part 1 Prompt Template + Variables

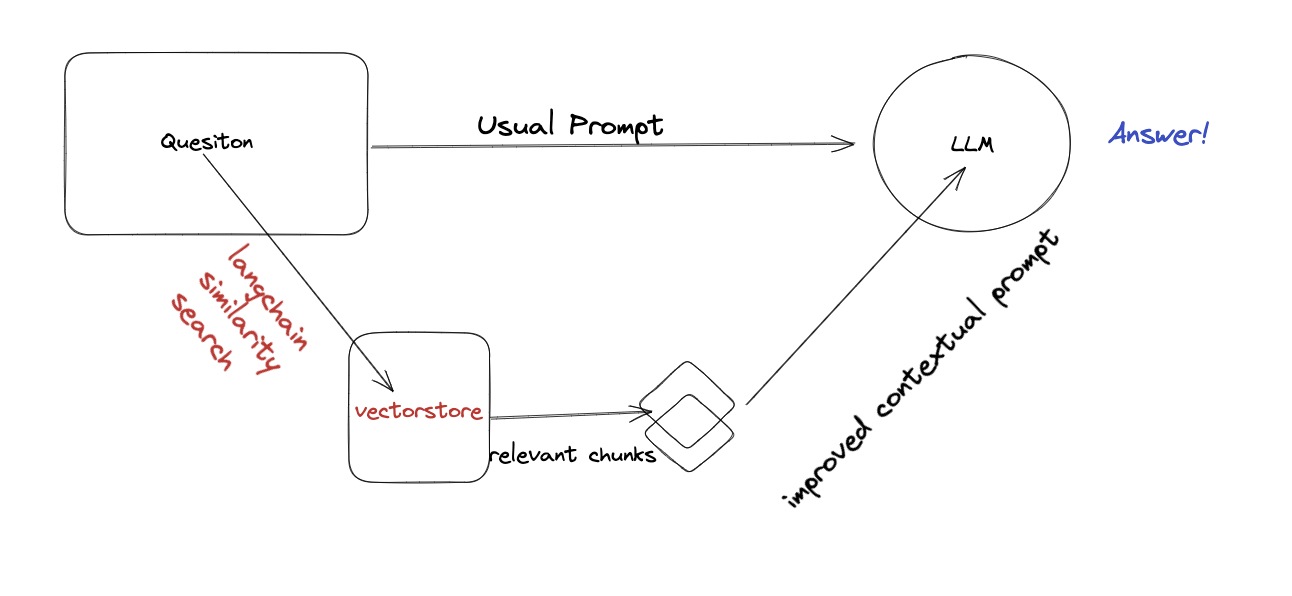

Langchain & Prompt Plumbing

Mastering Prompt Templates with LangChain

Understanding Prompt Templates in LangChain by Punyakeerthi BL Medium

Unraveling the Power of Prompt Templates in LangChain — CodingTheSmartWay

It Allows Us To Pass Dynamic Values.

Each Prompt Template Will Be Formatted And Then Passed To Future Prompt Templates As A Variable With The Same Name.
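The behaviour described in this heading can be sketched in a few lines of plain Python. The function `run_pipeline` and the example templates are hypothetical; the sketch only mirrors the described mechanism (a list of name and template tuples feeding a final prompt) and is not LangChain's `PipelinePromptTemplate` implementation.

```python
def run_pipeline(final_template: str, pipeline_prompts: list[tuple[str, str]], **inputs: str) -> str:
    """Format each named template in order, then format the final template."""
    values = dict(inputs)
    for name, template in pipeline_prompts:
        # The formatted text becomes a variable with the same name,
        # available to every later template and to the final prompt.
        values[name] = template.format(**values)
    return final_template.format(**values)


final_template = "{introduction}\n\n{start}"
pipeline_prompts = [
    ("introduction", "You are impersonating {person}."),
    ("start", "Now answer this question: {question}"),
]

result = run_pipeline(
    final_template,
    pipeline_prompts,
    person="Ada Lovelace",
    question="What is a loop?",
)
print(result)
```

Because each result is stored under its tuple name, a later template could also reference `{introduction}` directly, which is what makes parts of a prompt reusable.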
